For all their power, GenAI chatbots have hidden weaknesses that adversaries are actively searching for. That one unprotected spot can undo all your ambition, leading to financial loss, brand damage and legal liability.

This is where AI red teaming becomes essential. It’s the practice of simulating adversarial attacks to find weaknesses in AI systems before they can be exploited.

Wondering where “secure GenAI chatbots with AI red teaming” should fall on your priority list? The answer lies in the AI security assumptions your teams make every day.

Read on to see if you’re relying on common assumptions that can leave you exposed.

Why GenAI chatbot security demands immediate attention

- 96% of executives believe adopting generative AI makes a security breach likely in their organization. (IBM)

- 74% of IT leaders worry about the security and compliance risks AI presents. (Cloudera)

- Over 50% of technologists are “extremely” or “moderately” concerned about AI threats. (Plural Sight)

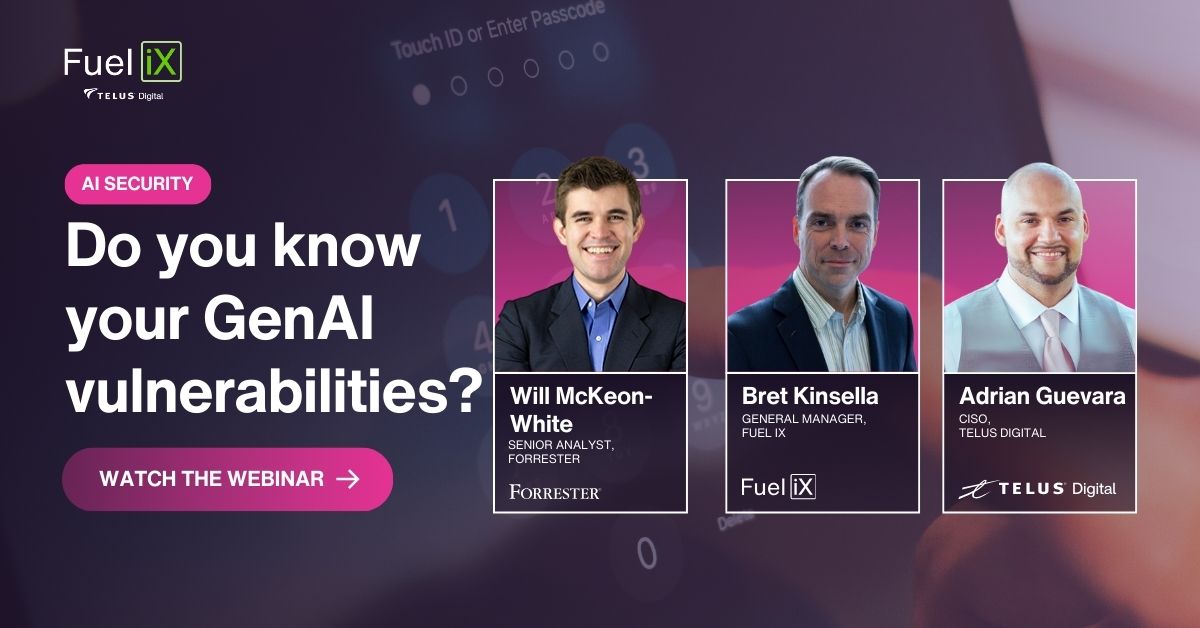

This concern goes beyond internal compliance. As CISO at TELUS Digital, Adrian Guevara notes in Overcoming the GenAI Trust Gap: "No matter how many guardrails we put on a system we release, an adversary may not be using any guardrails at all and pointing that towards you.”

Two fundamental challenges in GenAI security

1. The predictability problem: GenAI is fundamentally different

Traditional software is deterministic — it follows predictable logic. GenAI is probabilistic with a near-infinite range of possible inputs and outputs. This unpredictability creates security gaps that existing tools were never designed to handle. The risk multiplies when these models access sensitive data or critical systems.

2. The human problem: Expertise is scarce

A primary defense against GenAI unpredictability is AI red teaming — hiring experts to find flaws before attackers do. But as Brett Kinsella, GM of Fuel iX at TELUS, points out, “Our friends in the red teaming world are going to continue to be in short supply. And there’s something else that’s a problem. Speed. How fast can you do it? ” Scaling an effective defense that relies on a handful of experts when attacks can be launched at machine speed is going to be a challenge, to say the least.

Given the unpredictability, talent scarcity and need for speed, CISOs like Guevara are looking to automation to gain the advantage of AI red teaming. “I’m looking for ways to automate this," says Guevara. “How are we automating this in line as we’re developing a chatbot? What are we doing to periodically test this? I want to see an automated test that runs regularly and shows performance over a large test bed — so we can decide if the risk is too high or acceptable.”

Five flawed assumptions that leave GenAI chatbots vulnerable

Despite the clear and legitimate risks, many organizations delay essential GenAI chatbot security measures like AI red teaming. This inaction is often driven by a few common, yet flawed, assumptions that can put GenAI chatbots at risk.

Assumption 1: "Our AI is low-risk and only for internal use.”

- Reality check: Internal doesn't mean safe. A chatbot connected to internal systems (Slack, Google Drive, etc.) can still expose sensitive data, PII or IP. One malicious prompt can cause a data leak or compliance violation. All AI requires governance.

- AI red teaming advantage: Proactively assesses these systems to meet current and future regulatory requirements.

Assumption 2: "We use a trusted platform like OpenAI or Google.”

- Reality check: Platform safety isn't application safety. While providers secure their models, you’re responsible for how your specific application uses them. They don't know your data, your rules or your unique risks.

- AI red teaming advantage: Reveals gaps your platform provider can't see by testing your specific data, prompts and guardrails.

Assumption 3: "Our existing security measures are sufficient.”

- Reality check: GenAI creates a new class of vulnerabilities. Traditional security tools aren't built to find prompt injections, data leakage or model hallucinations.

- AI red teaming advantage: Adds critical AI-specific expertise to your security team. Automation can also detect emergent vulnerabilities that manual testing might miss

Assumption 4: "It's too early, and it will slow us down.”

- Reality check: Security isn't a roadblock — it’s an accelerator. Finding a vulnerability in the prototype stage is far cheaper and easier than fixing a public-facing disaster.

- AI red teaming advantage: Integrates directly into the development lifecycle, letting you run thousands of automated tests to deploy faster and with confidence.

Assumption 5: "We don’t need ongoing testing.”

- Reality check: AI isn't static. A model's behavior can drift over time. New user interactions can create new edge cases and misuse patterns can emerge. AI safety isn’t a one-time check — or a test-it-once-before-launch exercise — it’s a continuous process, just like security monitoring.

- AI red teaming advantage: Provides continuous monitoring and benchmarking to track your security posture and demonstrate improvement to stakeholders.

AI red teaming implementation benefits

GenAI chatbots create novel security vulnerabilities. Whether you rely on human AI red teams, automated platforms, or both, the mandate is the same: test like an adversary, early and often.

Implementing AI red teaming as a "chatbot vulnerability tester" allows you to:

- Stay ahead of emerging threats

- Protect bottom line and brand

- Navigate evolving compliance

- Build hard-to-break trust

The question isn't whether you need to secure genAI chatbots with AI red teaming; it's whether you'll implement it before or after a costly incident.

Is it better to plan for resilience or failure? Watch the webinar to get Forrester’s recommendation.